Auto Labeling

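Labeling often takes a lot of time and effort, so many auto-labeling tools are now available. One example is SAM (Segment Anything Model), a model Meta released a while ago that drew considerable attention: https://segment-anything.com/

Here is a simple usage example: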

import cv2
import matplotlib.pyplot as plt
import numpy as np
from segment_anything import sam_model_registry, SamAutomaticMaskGenerator


def show_anns(anns):
    """Overlay every mask on the current axes in a random translucent color."""
    if len(anns) == 0:
        return
    # Draw large masks first so smaller ones stay visible on top
    sorted_anns = sorted(anns, key=(lambda x: x['area']), reverse=True)
    ax = plt.gca()
    ax.set_autoscale_on(False)

    # RGBA canvas the size of the image, fully transparent to start
    img = np.ones((sorted_anns[0]['segmentation'].shape[0], sorted_anns[0]['segmentation'].shape[1], 4))
    img[:, :, 3] = 0
    for ann in sorted_anns:
        m = ann['segmentation']
        color_mask = np.concatenate([np.random.random(3), [0.35]])
        img[m] = color_mask
    ax.imshow(img)


# OpenCV loads images as BGR, so convert to RGB before passing to SAM
image = cv2.imread('./train/IMG_5208_jpg.rf.d85b8d233845117f0362c17ca2222c21.jpg')
image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

sam_checkpoint = "sam_vit_h_4b8939.pth"
model_type = "vit_h"
device = "cpu"

sam = sam_model_registry[model_type](checkpoint=sam_checkpoint)
sam.to(device=device)

# Generate masks for the whole image without any prompts
mask_generator = SamAutomaticMaskGenerator(sam)
masks = mask_generator.generate(image)

print(len(masks))
print(masks[0].keys())

plt.figure(figsize=(20, 20))
plt.imshow(image)
show_anns(masks)
plt.axis('off')
plt.show()

Running the script displays the image with every generated mask overlaid in a random translucent color.
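Each record in `masks` is a dict that, besides `segmentation` and `area`, carries a `bbox` in pixel XYWH form. To turn SAM output into label files, one option is to convert those boxes into YOLO detection lines. The sketch below is only an illustration under assumptions: the function name `sam_masks_to_yolo_lines`, the `min_area` filter, and the single placeholder `class_id` are hypothetical. SAM does not predict classes, so the class still has to be assigned by a person or by another model.

```python
def sam_masks_to_yolo_lines(masks, image_width, image_height, class_id=0, min_area=0):
    """Convert SAM mask records to YOLO detection label lines.

    class_id is a placeholder (assumption): SAM does not predict classes.
    """
    lines = []
    for ann in masks:
        if ann["area"] < min_area:
            continue  # skip masks smaller than the threshold
        x, y, w, h = ann["bbox"]  # pixels, (x, y) is the top-left corner
        # YOLO expects the box center and size, normalized to [0, 1]
        cx = (x + w / 2) / image_width
        cy = (y + h / 2) / image_height
        lines.append(
            f"{class_id} {cx:.6f} {cy:.6f} {w / image_width:.6f} {h / image_height:.6f}"
        )
    return lines


# Stand-in records with only the two keys used above
example_masks = [
    {"area": 5000, "bbox": [10, 20, 100, 50]},
    {"area": 12, "bbox": [0, 0, 4, 3]},
]
for line in sam_masks_to_yolo_lines(example_masks, 200, 100, min_area=100):
    print(line)
```

With the real generator output, `image_width` and `image_height` would come from `image.shape[1]` and `image.shape[0]`.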

Roboflow's Smart Selection Tool

Roboflow offers a similar smart selection tool that automatically outlines the shape of the target object for us.

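Labeling in YOLO Format with an Existing Model

Sometimes we want to train an existing model on new images, and those images contain common objects such as cars, people, and motorcycles. YOLO-style models already detect these objects well out of the box. To speed up labeling, we can convert an existing model's predictions into label files, import them into labeling software such as Roboflow, and then review them and fix any wrong labels. This makes the labeling work much easier.

Code for Converting Predictions to Labels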

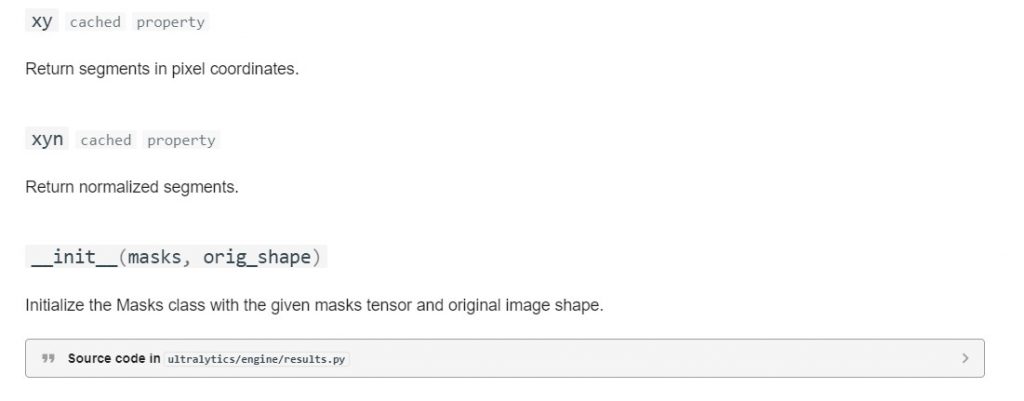

Documentation for the prediction `Results` object: https://docs.ultralytics.com/reference/engine/results/#ultralytics.engine.results.Results.tojson

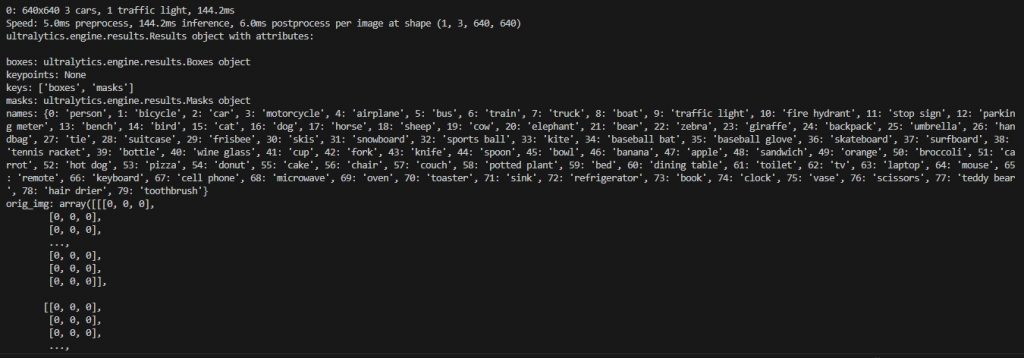

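What I really appreciate about YOLOv8 is how clear its documentation is, especially in the code itself. Just by running the code below, we can print the detailed structure of the returned object and see how to get the object information we need.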

from ultralytics import YOLO

# Load a model
model = YOLO('yolov8n.pt')  # pretrained YOLOv8n model

# Run batched inference on a list of images
results = model(['im1.jpg', 'im2.jpg'])  # return a list of Results objects

# Process results list
for result in results:
    boxes = result.boxes  # Boxes object for bbox outputs
    masks = result.masks  # Masks object for segmentation masks outputs
    keypoints = result.keypoints  # Keypoints object for pose outputs
    probs = result.probs  # Probs object for classification outputs
    # masks is None for a detection-only model such as yolov8n.pt;
    # load a segmentation model (for example yolov8n-seg.pt) to get masks
    print(masks)

From the API reference we can see that, when using a yolo-seg model, the returned coordinate information is described by this return value:

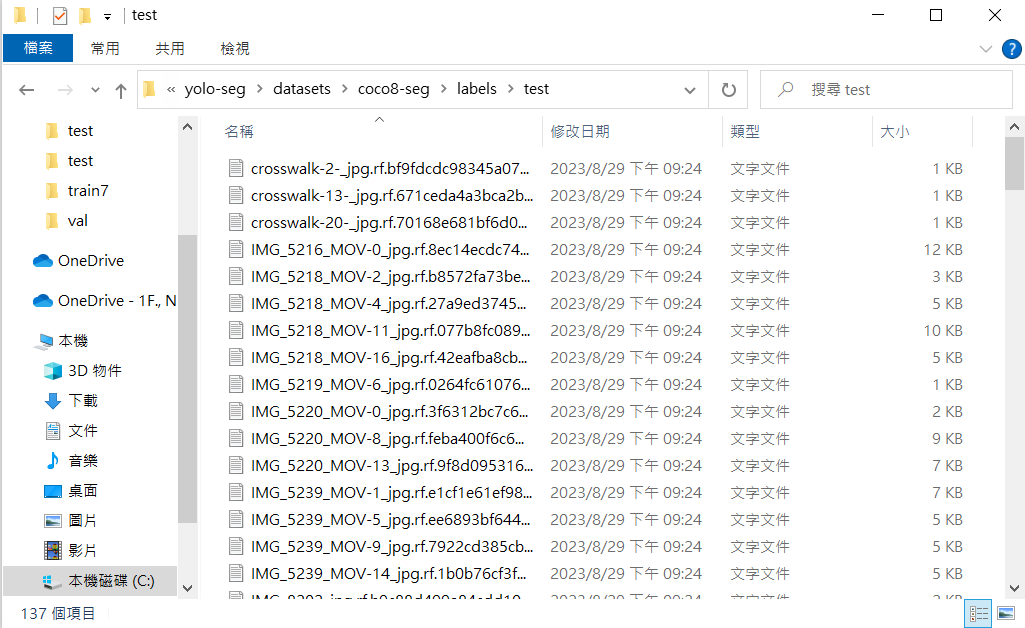

Complete Example: Converting Predictions to Labels

Set `folder_path` to the images folder. The labels are written to the corresponding labels folder.

import os

from ultralytics import YOLO

# Folder containing the images
folder_path = './datasets/coco8-seg/images/train'
images = []

# Make sure the folder exists
if not os.path.exists(folder_path):
    print("Folder does not exist")
else:
    # Walk through every file in the folder
    for filename in os.listdir(folder_path):
        # Keep image files only (adjust the extensions as needed)
        if filename.lower().endswith(('.png', '.jpg', '.jpeg')):
            # Build the full file path
            images.append(os.path.join(folder_path, filename))

# Load a model
model = YOLO('yolov8n-seg.pt')  # pretrained YOLOv8n segmentation model

# Run batched inference on the list of images (skipped when none were found)
results = model(images) if images else []

# Write one YOLO-format label file per image
for r in results:
    if r is None or r.masks is None:
        continue  # nothing was segmented in this image

    lines = []
    for i, mask in enumerate(r.masks.xyn):
        cls = int(r.boxes.cls[i].item())
        # One line per object: class id followed by normalized x y pairs
        values = [cls]
        for row in mask:
            values.append(row[0])
            values.append(row[1])
        lines.append(" ".join(str(x) for x in values))

    # .../images/train/a.jpg -> .../labels/train/a.txt, for any image extension
    image_dir, image_name = os.path.split(r.path)
    label_dir = image_dir.replace('images', 'labels')
    os.makedirs(label_dir, exist_ok=True)
    label_path = os.path.join(label_dir, os.path.splitext(image_name)[0] + '.txt')

    # "w" overwrites, so re-running the script does not duplicate labels
    with open(label_path, "w") as file:
        file.write("\n".join(lines))
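Before importing the generated files into a labeling tool, it can help to read a label line back and check that it decodes to sensible pixel coordinates. The following is a minimal sketch of that check, assuming the line layout written above (class id followed by normalized x y pairs). The function name `parse_yolo_seg_line` is hypothetical and not part of ultralytics.

```python
def parse_yolo_seg_line(line, image_width, image_height):
    """Decode one YOLO segmentation label line into (class_id, pixel polygon)."""
    parts = line.split()
    # A valid line has a class id plus at least three x y pairs (odd token count)
    if len(parts) < 7 or len(parts) % 2 == 0:
        raise ValueError(f"malformed label line: {line!r}")
    class_id = int(parts[0])
    coords = [float(v) for v in parts[1:]]
    # Normalized values are scaled back to pixels
    polygon = [
        (x * image_width, y * image_height)
        for x, y in zip(coords[0::2], coords[1::2])
    ]
    return class_id, polygon


class_id, polygon = parse_yolo_seg_line("2 0.25 0.25 0.75 0.25 0.5 0.75", 640, 480)
print(class_id, polygon)
```

A line that fails to parse, or a polygon with points far outside the image, is a quick signal that the export step needs another look.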

Converting YOLO Format to COCO Format

See this project: https://github.com/Taeyoung96/Yolo-to-COCO-format-converter/tree/master
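To give a feel for what such a conversion involves, here is a rough sketch of the bounding-box part. YOLO detection labels store a normalized center and size, while COCO stores `[x_min, y_min, width, height]` in pixels. The function name `yolo_line_to_coco_annotation` and the choice to reuse the YOLO class id directly as `category_id` are assumptions made for illustration. This is not the linked project's code, which may map categories differently.

```python
def yolo_line_to_coco_annotation(line, image_id, annotation_id, image_width, image_height):
    """Build one COCO-style annotation dict from a YOLO detection label line."""
    class_id, cx, cy, w, h = line.split()
    # Scale the normalized size back to pixels
    box_w = float(w) * image_width
    box_h = float(h) * image_height
    # Move from the box center to its top-left corner
    x_min = float(cx) * image_width - box_w / 2
    y_min = float(cy) * image_height - box_h / 2
    return {
        "id": annotation_id,
        "image_id": image_id,
        "category_id": int(class_id),  # assumption: ids are reused unchanged
        "bbox": [x_min, y_min, box_w, box_h],
        "area": box_w * box_h,
        "iscrowd": 0,
    }


annotation = yolo_line_to_coco_annotation("3 0.5 0.5 0.25 0.5", 1, 1, 640, 480)
print(annotation["bbox"])
```

A full COCO file also needs the `images` and `categories` lists, which this sketch leaves out.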